Sally’s Thoughts: Why humans are needed to conduct quality reviews and why automated systems on their own don’t cut it

Clarifying External Validation vs. Quality Review in Compliance

We recently received feedback from a client who used an automated tool for external validation. This experience highlighted common misunderstandings in our industry around key compliance terminology—especially the term “validation.” In the context of RTO compliance, validation, as per the Standards, is a post-assessment process that ensures assessment tools consistently produce valid and reliable assessment judgments. However, we often see “validation” mistakenly used interchangeably with “quality review” or “quality assurance.”

When clients request “validation reports” with our learning and assessment packs, we use this as an opportunity to clarify that validation is not simply a quality review. A “validation report” provided by some resource suppliers is typically an internal quality review that only confirms the supplier’s own belief that their tools meet quality standards. While quality review is crucial, it does not meet the compliance requirements of validation, which takes place after assessment and checks that the tools actually produced valid and reliable judgments.

Ensuring that both quality review and validation are clearly understood and correctly implemented is vital for compliance and, ultimately, for delivering high-quality training and assessment.

Limitations of Automated Validation and Quality Review Tools in RTO Compliance

As we see more automated validation and quality review tools emerging in the industry, I want to highlight a few important limitations that RTOs should keep in mind. These tools primarily look for keywords and can be helpful in identifying missing content, but they often fail to capture the nuances of effective assessment design. For instance, if an assessment avoids direct unit language to be more relevant to industry professionals and future workers, these tools may flag it as non-compliant even when it still addresses the unit requirements.

From my experience, effective assessments involve more than just keywords—they require contextualisation for the student cohort, clear instructions, accurate benchmarks for consistent judgments, and realistic simulations. Automated systems can’t fully assess these elements. A thoughtfully crafted assessment that omits unit-specific language for a better learning experience might be marked as non-compliant by an automated tool, while a poorly constructed assessment loaded with keywords might slip through as “compliant.”
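To make the keyword problem concrete, here is a minimal Python sketch of a naive keyword check. It is an illustration only and does not represent how any particular product works: the keyword list, the function names, the threshold and both sample assessment texts are made up for this example.

```python
import re

# Hypothetical phrases lifted from a unit of competency (illustrative only).
UNIT_KEYWORDS = ["identify hazards", "assess risks", "control measures"]


def keyword_coverage(assessment_text, keywords=UNIT_KEYWORDS):
    """Return the fraction of keywords found verbatim in the text."""
    # Lowercase and collapse whitespace so line breaks don't hide a phrase.
    normalised = re.sub(r"\s+", " ", assessment_text.lower())
    found = [kw for kw in keywords if kw in normalised]
    return len(found) / len(keywords)


def naive_verdict(assessment_text, threshold=1.0):
    """Label the text 'compliant' only if keyword coverage meets the threshold."""
    if keyword_coverage(assessment_text) >= threshold:
        return "compliant"
    return "non-compliant"


# A contextualised task written in workplace language, with no unit wording.
contextualised = (
    "Walk through the workshop with your supervisor. Note anything that could "
    "injure someone, rate how likely and how serious each one is, and explain "
    "what you would change to make the area safe."
)

# A poorly constructed task that simply repeats the unit wording.
keyword_stuffed = (
    "Identify hazards. Assess risks. Control measures. "
    "Write about identify hazards, assess risks and control measures."
)

print(naive_verdict(contextualised))   # non-compliant
print(naive_verdict(keyword_stuffed))  # compliant
```

The check has no way of seeing that the first task asks the student to do everything the keywords describe, or that the second gives no context, instructions or benchmarks. Those are judgments a human reviewer has to make.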

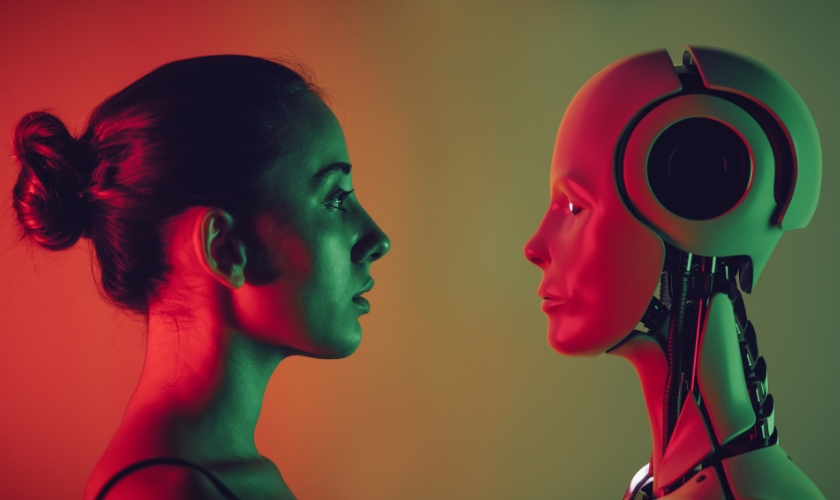

I believe in the value of automation and AI for efficiency, but quality review tools should supplement, not replace, the insight of a qualified compliance expert. Just as I wouldn’t publish content generated by AI without fact-checking and refining it, I don’t recommend relying solely on automated tools for validation or quality review. These tools work best when they support an experienced professional who can make informed judgments.

Automated tools are undoubtedly part of the future, but only if there’s an intelligent human at the wheel.

Sally is a leading expert in vocational education and training (VET), with over 20 years dedicated to guiding RTO, CRICOS, and ELICOS providers in achieving and maintaining compliance. As a former auditor and a member of the Training Package Quality Assurance Panel, Sally understands the intricate demands of registration, compliance, and quality assurance.

Her commitment to excellence has driven her to develop high-quality learning and assessment resources that empower training organisations to focus on delivering outstanding student outcomes. Known for her keen eye for detail and comprehensive approach, Sally continues to support VET providers and industry stakeholders with unparalleled expertise and progressive insights.